Practical 1: Simulating Observations with UFO

In this practical you will:

Learn how to download and run JEDI in a Singularity container

Acquaint yourself with the rich variety of observation operators now available in UFO

Overview

The comparison between observations and forecasts is an essential component of any data assimilation (DA) system and is critical for accurate Earth System Prediction. It is common practice to do this comparison in observation space. In JEDI, this is done by the Unified Forward Operator (UFO). Thus, the principal job of UFO is to start from a model background state and then simulate what that state would look like from the perspective of different observational instruments and measurements.

In the data assimilation literature, this procedure is often represented by the expression \(H({\bf x})\). Here \({\bf x}\) represents prognostic variables on the model grid, typically obtained from a forecast, and \(H\) represents the observation operator that generates simulated observations from that model state. The sophistication of observation operators varies widely, from in situ measurements where it may just involve interpolation and possibly a change of variables (e.g. radiosondes), to remote sensing measurements that require physical modeling to produce a meaningful result (e.g. radiance, GNSSRO).
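As a minimal illustration of the simplest case (a sketch, not how UFO itself is implemented), consider an in situ temperature observation at height \(z_o\) that lies between two model levels \(z_k\) and \(z_{k+1}\). The symbols \(z_o\), \(z_k\), \(T_k\), and \(w\) are introduced here only for illustration, and we assume the model column has already been interpolated horizontally to the observation location. A linear vertical interpolation operator would then be:

\[
H({\bf x}) = (1 - w)\, T_k + w\, T_{k+1}, \qquad w = \frac{z_o - z_k}{z_{k+1} - z_k}
\]

With made-up values \(T_k = 280\) K at \(z_k = 1000\) m, \(T_{k+1} = 274\) K at \(z_{k+1} = 2000\) m, and \(z_o = 1250\) m, we get \(w = 0.25\) and \(H({\bf x}) = 0.75 \times 280 + 0.25 \times 274 = 278.5\) K. Remote sensing operators replace this interpolation with a physical model of the instrument.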

So, in this tutorial, we will be running an application called \(H({\bf x})\), which is often denoted in program and function names as Hofx. This in turn will highlight the capabilities of JEDI’s Unified Forward Operator (UFO).

Along the way, we will also get to know the JEDI Singularity container. We will run these applications without compiling any code, because JEDI is already in the container and ready to go.

Step 1: Access your AWS Instance

As a padawan in this JEDI academy, you already have a compute node on the Amazon cloud waiting for you.

Specifically, we will launch an EC2 (Elastic Compute Cloud) instance through Amazon Web Services (AWS) for each participant in the Academy. This is where you will be running JEDI. Throughout the documentation, we will refer to this as your AWS instance or, equivalently, your AWS node.

Note

Some users may be tempted to run the activities on their own laptop or workstation or on an HPC platform if they have access to JEDI Environment modules. However, we discourage this. The use of AWS instances and Singularity containers allows us to provide a uniform computing environment for all so that we can focus on learning the JEDI code without the distraction of platform-related debugging.

To participate in the activities, you will need two things, which the JEDI team will provide:

The IP address of your AWS node

A password to allow you to log into it

To access your AWS node, open a web browser and navigate to this URL:

http://<your-ip-address>:8080

Enter your password at the prompt.

You should now see the default JupyterLab interface, which includes a directory tree displayed as an interactive (i.e. clickable) menu on the left and a large display area. At the top of the display area you should see several tabs. One is labeled Console 1. This is a Jupyter Python console, capable of interpreting Python commands. Another tab is labeled ubuntu with a local IP address. This is an ssh terminal, running bash. From here you have access to the Linux command line. This is where we will be doing most of our work.

Step 2: Download and Enter the Container

Software containers are encapsulated environments that contain everything you need to run an application, in this case JEDI. JEDI containers are implemented by means of a container provider called Singularity.

Go into the ssh terminal and enter this to download the JEDI tutorial Singularity container from Sylabs Cloud (you can do this on any computer that has Singularity installed):

singularity pull library://jcsda/public/jedi-tutorial

You should see a new file in your current directory with the name jedi-tutorial_latest.sif. If you wish, you can verify that the container came from JCSDA by entering:

singularity verify jedi-tutorial_latest.sif

Now enter the container with the following command:

singularity shell -e jedi-tutorial_latest.sif

To exit the container at any time (not now), simply enter

exit
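If you lose track of whether a terminal is inside the container or back on the host, a quick check like the following can help. This is a minimal sketch: it assumes Singularity exports the SINGULARITY_NAME environment variable inside the container, and in_container is a hypothetical helper name, not something provided by JEDI.

```shell
# Sketch: report whether this shell is running inside a Singularity container.
# Assumption: Singularity sets SINGULARITY_NAME inside the container.
in_container() {
  [ -n "${SINGULARITY_NAME:-}" ]
}

if in_container; then
  echo "inside container: ${SINGULARITY_NAME}"
else
  echo "on the host (no container detected)"
fi
```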

If you have never used a software container before, you may want to take a moment to look around.

All the libraries you’ll need to run JEDI are installed in the container and ready to be used. For example, you can run this command to see the NetCDF configuration:

nc-config --all

The JEDI code is in the /opt/jedi directory. Have a look. The fv3-bundle directory contains the source code, organized into various repositories such as oops, ioda, saber, ufo, and fv3-jedi. The /opt/jedi/build directory contains the compiled executables and libraries.

Step 3: Setup

Now, the description in the Overview section gives us a good idea of what we need to run \(H({\bf x})\). First, we need \({\bf x}\) - the model state. In this tutorial we will use background states from the FV3-GFS model with a resolution of c48.

Next, we need observations to compare our forecast to. The script to get these background and observation files is in the container. But, before we run it, we should find a good place to run our application. The fv3-bundle directory is inside the container and thus read-only, so that will not do.

So, you’ll need to copy the files you need to a directory under your home directory that is dedicated to running the tutorial:

mkdir -p $HOME/jedi/tutorials

cp -r /opt/jedi/fv3-bundle/tutorials/Hofx $HOME/jedi/tutorials

cd $HOME/jedi/tutorials/Hofx

chmod a+x run.bash

We’ll call $HOME/jedi/tutorials/Hofx the run directory.

Now we are ready to run the script to obtain the input data (from the run directory):

./get_input.bash

You only need to run this once. It will retrieve the background and observation files from a remote server and place them in a directory called input. Have a look inside that directory to see the variety of observations that JEDI can now process. In this practical we will only be concerned with a small subset of these.
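Because the download only needs to happen once, you may want to guard against repeating it by accident. The following is a minimal sketch; fetch_input_once is a hypothetical helper, not part of the tutorial, and it only checks that the input directory exists, so a partially downloaded directory would be treated as complete.

```shell
# Sketch: fetch the input data only if the input directory is missing.
# fetch_input_once is a hypothetical helper, not part of the tutorial.
fetch_input_once() {
  run_dir="$1"
  if [ -d "${run_dir}/input" ]; then
    echo "input already present in ${run_dir}/input"
  else
    # Run the tutorial's download script from inside the run directory.
    ( cd "${run_dir}" && ./get_input.bash )
  fi
}
# Usage: fetch_input_once "$HOME/jedi/tutorials/Hofx"
```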

You may have already noticed that there is another directory in your run directory called config. Take a look. It contains a different type of input file, including configuration (YAML) files that specify the parameters for the JEDI applications we’ll run and Fortran namelist files that specify configuration details specific to the FV3-GFS model.

Step 4: Run the Hofx application

Before running a JEDI application, it’s always a good idea to make sure your system has enough stack and virtual memory:

ulimit -s unlimited

ulimit -v unlimited
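These limits apply only to the current shell and the processes it starts, so they need to be set again in each new terminal. To confirm what is currently in effect, you can print the values. This is a small sketch; report_limits is a hypothetical helper name.

```shell
# Sketch: print the stack and virtual memory limits for the current shell.
# Both values are reported by bash in kB, or as "unlimited".
report_limits() {
  echo "stack size (kB): $(ulimit -s)"
  echo "virtual memory (kB): $(ulimit -v)"
}

report_limits
```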

Now take a look at the run.bash script in the $HOME/jedi/tutorials/Hofx directory. This is what we will be using to run our Hofx application. Find the command that runs the fv3jedi_hofx_nomodel.x executable. We’ll be running these executables manually in the second practical.

When you are ready, try it out:

./run.bash

If you omit the arguments, the script just gives you a list of instruments that are available in this tutorial. For this step we will focus on radiance data from the AMSU-A instrument on the NOAA-19 satellite:

./run.bash Amsua_n19

Skim the text output as it is flowing by. Can you spot where the quality control (QC) on the observations is being applied?
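If the output scrolls by too quickly, you can save it to a file and search it afterward. The sketch below makes two assumptions: the log file name is arbitrary, and the search pattern "qc" is only a guess at how the relevant lines are worded, so adjust it to match what you actually see. find_qc_lines is a hypothetical helper.

```shell
# Sketch: search a saved log for lines that mention QC (case-insensitive).
# Assumption: the pattern "qc" is a guess; adjust it to the actual output.
find_qc_lines() {
  grep -n -i "qc" "$1"
}
# Usage (from the run directory):
#   ./run.bash Amsua_n19 2>&1 | tee Amsua_n19.log
#   find_qc_lines Amsua_n19.log
```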

Step 5: View the Simulated Observations

You’ll find the graphical output from Step 4 in the output/plots/Amsua_n19 directory.

You can view the images by navigating to that directory in the JupyterLab interface and clicking on the image files.

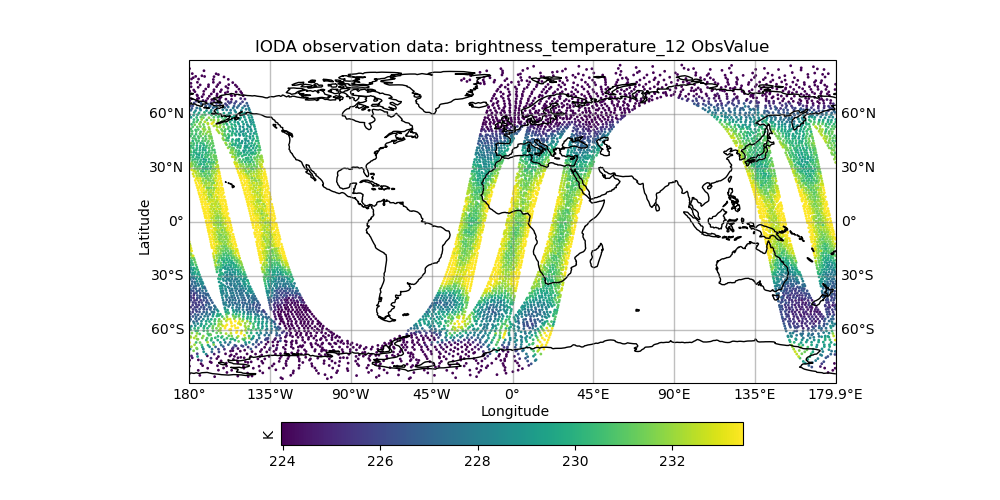

For example, try clicking on brightness_temperature_12_ObsValue.png. The plot should look something like this:

This shows brightness temperature measurements over a 6-hour period. Each band of points corresponds to an orbit of the spacecraft.

Now look at some of the other fields. The files marked with ObsValue correspond to the observations and the files marked with hofx represent the simulated observations computed by means of the \(H({\bf x})\) operation described above. This forward operator relies on JCSDA’s Community Radiative Transfer Model (CRTM) to predict what this instrument would see for that model background state.

The files marked omb represent the difference between the two: observations minus background. In data assimilation this is often referred to as the innovation and it plays a critical role in the forecasting process; it contains newly available information from the latest observations that can be used to improve the next forecast. To see the innovation for this instrument over this time period, view the brightness_temperature_12_latlon_ombg_mean.png file.
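In symbols, and using \({\bf y}\) for the observations and \({\bf d}\) for the innovation (notation introduced here for illustration), with \({\bf x}\) taken to be the background state:

\[
{\bf d} = {\bf y} - H({\bf x})
\]

For example, with made-up values, if a channel observes a brightness temperature of 215.3 K and the simulated observation from the background is 214.1 K, the innovation is \(215.3 - 214.1 = 1.2\) K. A positive value means the instrument saw a warmer scene than the background predicted.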

If you are curious, you can find the application output in the directory called output/hofx. There you’ll see 12 files generated, one for each of the 12 MPI tasks. This is the data from which the plots are created. The output filenames include information about the application (hofx3d), the model and resolution of the background (gfs_c48), the file format (ncdiag), the instrument (amsua), and the time stamp.
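A quick way to check that every MPI task wrote its file is to count the files in that directory. This is a minimal sketch with a hypothetical helper name, count_files. The count should be 12 only if you have run a single observation type so far.

```shell
# Sketch: count the regular files directly inside a directory.
count_files() {
  find "$1" -maxdepth 1 -type f | wc -l | tr -d ' '
}
# Usage (from the run directory): count_files output/hofx
```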

Step 6: Explore

The main objective here is to return to Steps 4 and 5 and repeat for different observation types. Try running another observation type and look at the results in the output/plots directory. A few suggestions: look at how the aircraft observations trace popular flight routes; look at the mean vertical temperature and wind profiles as determined from radiosondes; discover what observational quantities are derived from Global Navigation Satellite System radio occultation measurements (GNSSRO); revel in the 22 wavelength channels of the Advanced Technology Microwave Sounder (ATMS).
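If you would like to run several observation types in one go, a simple loop will do. The sketch below is an illustration only: run_obs_types is a hypothetical helper, and the RUN_CMD override is an assumption of this sketch (not a feature of run.bash) that lets you try the loop without the tutorial files. Take the observation type names from the list printed by ./run.bash with no arguments.

```shell
# Sketch: run several observation types in sequence.
# RUN_CMD defaults to the tutorial's run script; the override exists only
# so the loop can be tried elsewhere (an assumption of this sketch).
run_obs_types() {
  for obs in "$@"; do
    echo "=== ${obs} ==="
    ${RUN_CMD:-./run.bash} "${obs}" || echo "run failed for ${obs}"
  done
}
# Usage (from the run directory):
#   run_obs_types Amsua_n19 <other names listed by ./run.bash>
```

Each run writes its plots to its own subdirectory of output/plots, as with output/plots/Amsua_n19 above, so you can compare the results afterward.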